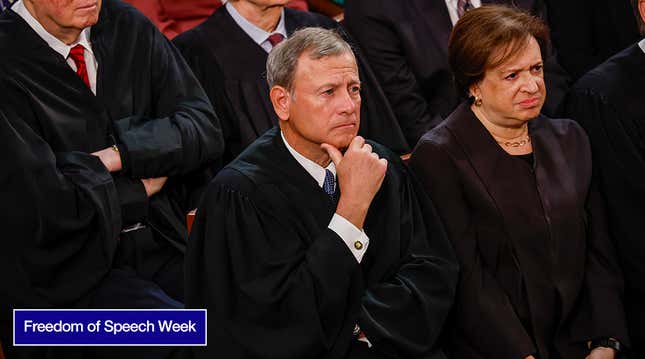

U.S. Supreme Court justices may be many things, but they definitely aren’t social media experts. The justices roasted themselves Tuesday during oral arguments in a case against Google that could determine the fate of nearly all speech online. Tech companies and advocates fear a ruling against Google could fundamentally alter the way the internet works and lead to a “horror show” of offensive, unhelpful content. Justices listening to the arguments were very, very confused.

“We really don’t know about these things,” Justice Elena Kagan said. “These are not like the nine greatest experts on the internet.” The courtroom erupted in what sounded like nervous laughter.

Experts or not, those nine justices are tasked with determining whether Section 230 liability protections extend to recommendation algorithms. Those protections, written in 1996 as part of the Communications Decency Act, prevent online platforms from facing lawsuits if a user posts something illegal and simultaneously shield them from legal liability for moderating their own content. Section 230 is traditionally understood to apply to “third-party content” on a platform, but attorneys suing Google claim the company’s algorithmic recommendation process is akin to creating its own content.

“Isn’t it better to keep it [Section 230] the way it is?” asked Brett Kavanaugh.

Argument over YouTube thumbnails leaves justices confused

The case in question, Gonzalez v. Google, stems from a lawsuit filed by the parents of Nohemi Gonzalez, a 23-year-old college student who died during the 2015 ISIS attack in Paris that left 129 people dead. The suit alleges Google aids and abets terrorists by including suspected terrorist content in its algorithmic recommendations, which the plaintiffs say makes the company liable under the Anti-Terrorism Act. Eric Schnapper, an attorney representing the parents, tried to illustrate that point before the court by pointing to YouTube thumbnails, which he claimed were at least partly first-party content since they include a URL and image generated by Google.

“Our contention is [that] the use of thumbnails is the same thing under the statute as sending someone an email and saying, ‘You might like to look at this new video now,’” Schnapper said.

If you think that sounds like a stretch, you’re not alone. Multiple justices were left scratching their heads during the roughly two-hour oral argument.

“I admit I’m completely confused by whatever argument you’re making at the present time,” Justice Samuel Alito said. Newly appointed Justice Ketanji Brown Jackson echoed that sentiment, admitting she was “thoroughly confused” by Schnapper’s argument.

“So they [social media companies] shouldn’t use thumbnails at all?” Alito asked.

Schnapper then tried to dodge questions from justices asking if a ruling in his favor could have unintended consequences for otherwise innocuous content recommended through algorithms. Multiple justices, including Donald Trump’s appointee Brett Kavanaugh, said removing Section 230 protections from recommendations could potentially open companies up to a dizzying array of unrelenting lawsuits. That could make it unrealistic for companies to host and take down even moderately controversial content.

“You are asking us to make a very precise judgment,” Kavanaugh said. Both Kavanaugh and Kagan expressed trepidation over whether the Supreme Court should weigh in at all, adding that Congress may be better equipped to decide the fate of recommendation algorithms.

“We’re a court,” Kagan said. “We really don’t know about these sorts of things.”

The Supreme Court’s ruling could turn social media into ‘The Truman Show versus the horror show’

Tech companies and Section 230 proponents argue removing liability protections for recommendation algorithms would fundamentally alter the way the internet currently works and could force social media companies to engage in rigorous levels of self-censorship or over-enforcement. Supporters of wide protections, like the Electronic Frontier Foundation, say social media companies may opt to simply avoid hosting any important but potentially controversial political content to avoid lawsuits. Others may decide on an anything-goes, chaos-filled platform. Lisa Blatt, a lawyer representing Google, told the court that reality would leave internet users choosing between “The Truman Show versus the horror show.”

“The internet would’ve never gotten off the ground if anybody could sue at any time and it were left up to 50 states’ liability regimes,” Blatt added.

Retweets, likes, and chatbot hallucinations could all lead to lawsuits

Though much of the debate surrounding the extent of Section 230 protections focuses on consequences for tech companies, the oral arguments shone a spotlight on the potential downstream effects for everyday users as well. Responding to questions from Amy Coney Barrett, Gonzalez’s attorney Schnapper admitted a ruling in his client’s favor could mean regular users’ retweets or likes would not receive liability protection under Section 230, since both of those actions would technically count as new, generated content. That means an errant retweet, theoretically at least, could lead to a lawsuit.

“That’s content you’ve created,” Schnapper said, referring to the retweeter.

If that legal theory wins the day, things could get a lot more complicated online, particularly in the age of advanced chatbots and generative artificial intelligence. Justice Neil Gorsuch raised that point during the oral arguments, saying he did not believe chatbots, such as OpenAI’s ChatGPT, should be entitled to Section 230 protection since they are creating “new” content. Under that framework, companies could potentially be open to lawsuits for harmful or false information blurted out by an AI system.

“Artificial intelligence generates poetry,” Gorsuch said. “It generates polemics today that would be content that goes beyond picking, choosing, analyzing or digesting content. And that is not protected.”

Though the justices didn’t seem all too convinced by the wavering arguments offered by Gonzalez’s lawyer, they weren’t necessarily sold on the idea that Section 230 protection inherently extends to recommendations. Justice Jackson voiced skepticism over whether the authors of the decades-old Section 230 could have anticipated recommendation algorithms.

“Isn’t it true that the statute had a more narrow scope of immunity than courts have ultimately interpreted it to have, and that it was really just about making sure that your platform and other platforms weren’t disincentivized to block and screen and remove offensive content?” Jackson asked.

Democratic Sen. Ron Wyden and former Republican Rep. Chris Cox, the original authors of Section 230, diverged from that view in a filing to the court in Gonzalez v. Google, where they said recommendation systems are an example of a “more contemporary method of content presentation.”

“Congress drafted Section 230 in a technology-neutral manner that would enable the provision to apply to subsequently developed methods of presenting and moderating user-generated content,” the lawmakers wrote.

The justices will reconvene on Wednesday to hear arguments in Twitter v. Taamneh, which similarly focuses on whether tech companies are liable under both Section 230 and the Anti-Terrorism Act.